STRUCTURES OF EMOTION

first performed on August 1, 2021

Downtown Santa Cruz, Santa Cruz, CA

performed once in 2021

AVITAL MESHI / TREYDEN CHIARAVALLOTI

San Jose, CA / Cambridge, MA

avitalmeshi@gmail.com

AvitalMeshi.com

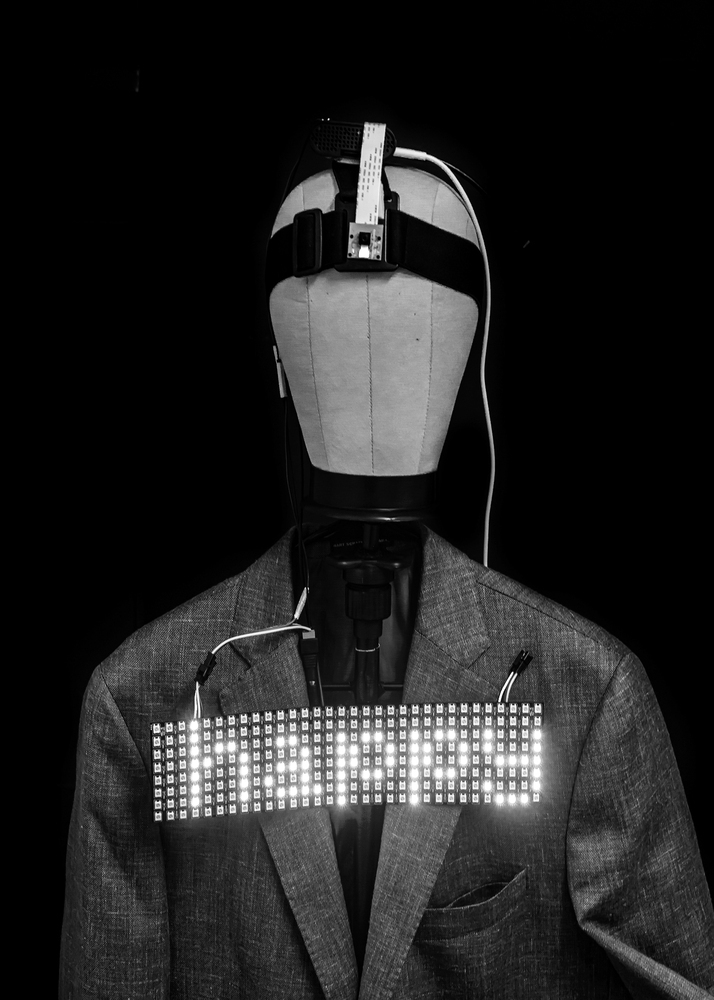

When we see someone smiling, does it necessarily mean that this person is “happy”? “Structures of Emotion” is a performance that examines how humans and machines read and interpret emotional expressions. The work highlights the difficulty of translating “feelings” into words. The performance uses an artificial intelligence (AI) based wearable device that recognizes a person’s face and estimates their emotions in real time. At the same time, a human imitates this process by looking at the same person and attempting to assess their emotions. The wearable piece was designed to form a chimeric biotechnological body equipped with abilities beyond those of a human. The person wearing it is essentially able to process reality through two different perspectives: their biological senses and the lens of a pre-trained AI algorithm based on an expansive dataset.

That said, the technology in its current state is clearly still immature, and it carries biases and faults that bring to mind pseudoscientific physiognomic practices. Using both AI analysis and human analysis of facial expressions reminds us that the technology is far from maturing beyond its maker and that both humans and machines still have much to learn. While acknowledging these potential inaccuracies, we decided to continue using this particular technology in order to speculate about a future in which its design is improved. By attaching it to the body, we aspired to situate the knowledge that comes with it and to reemphasize vision. Inspired by Donna Haraway’s notion of “situated knowledges,” our goal was to stress the partiality of both the human and the technology and to show that “all eyes, including our own organic ones, are active perceptual systems, building in translation and specific ways of seeing.”

The performance took place both in person and online. The in-person version was a street performance in which we walked through downtown Santa Cruz and interacted with passersby. The online version was scheduled in advance with participants who wished to interact with the system. In both versions, the entanglement between human and machine led to affective loops in which participants performed for the machine, made faces, and tried to “score” every emotional expression that the AI system could recognize. This behavior opens a discussion about the potential of AI systems to “train” humans and to push them to perform different behaviors.